⚡ Query Performance Tuning: ORDER BY from 20s to 119ms in Microsoft SQL Server

Last week we noticed that in some of our production environments, a request was performing very bad times, more than 20 seconds to complete. After analyzing the case we could reduce this to 119 milliseconds. I would like to share the steps we made to reach this:

Note: For performance benchmarks, I created similar code with random data in this exampe.

Context

After analyzing some metrics, the first thing on the table was to consider using a cache. But, wait, this seems more like a solution instead of the root cause of the problem right? Let’s take a look at what we have here:

We got an endpoint that returns all product operations details with the most recent data. To get all this information, we do 3 steps:

- Get all the ProductIds from an external source, for example, files in disk.

- For each product, we execute a query to get the most recent product details from a MSSQL database.

- We merge all data and send the response.

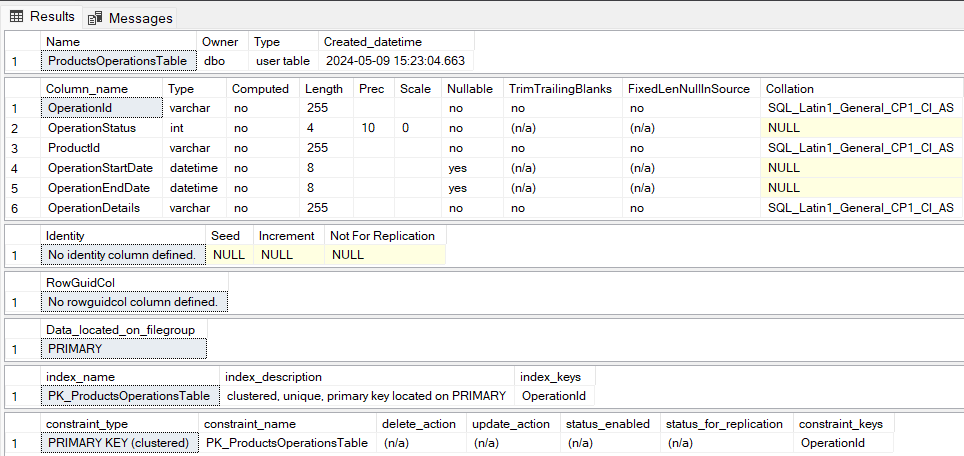

The table of the step 2 dbo.ProductsOperationsTable looks something like this:

Finding the root cause

The problem was found in certain production environments with several amount of products and operations. We decided to automatize a few load tests with BenchmarkDotnets and after reproducing the error locally we noticed that the time was growing linearly (Aprox: 50 products 2 seconds, 500 products 20 seconds all over 80k operations, around 42 million rows scanned).

On the other hand, we compared the amount of time invested in I/O operations to get all the PipelineIds from disk vs the amount of time invested to query all data from the database. We found that the bottleneck was the database (Aprox: 500 products 0.0734 milliseconds for I/O operations reading files from disk and around 20 seconds for the database queries).

Also, we noticed that the query we were performing in step 2 was filtering by columns without indexes:

SELECT TOP 1

OperationId,

OperationStatus,

ProductId,

OperationStartDate,

OperationEndDate,

OperationDetails

FROM

dbo.{DB.ProductsOperationsTable}

WHERE

ProductId = @ProductId

ORDER BY

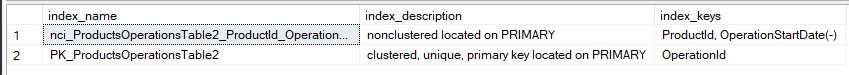

OperationStartDate DESCAdding the right index

We could compare 3 different options of index here (over 500 products):

- Index by ProductID 10 seconds

- Index by StartDate DESC 30 seconds

- Compose Index by (ProductID, StartDate DESC) 10 seconds

As we saw, we were filtering by ProductId and then by StartDate in a desc way, and by comparing the results we found that the compose Index and the ProductID Index were the best way to go.

But. Why the Order Index is not working? Well, after reading this post about Pipelined/Indexed ORDER BY for best performance, we noticed that we were using the wrong query. When using a composed index, to get the advantage of the two indexes while filtering, we need to specify both columns in order from left to right, like a pure B-tree search.

SELECT TOP 1

OperationId,

OperationStatus,

ProductId,

OperationStartDate,

OperationEndDate,

OperationDetails

FROM

dbo.{DB.ProductsOperationsTable2}

WHERE

ProductId = @ProductId

ORDER BY

ProductId, OperationStartDate DESCWith this update, we could reduce the query time to 10 seconds.

But what about latency

According to the original algorithm, what happens if there is latency? We are performing 500 queries, so performance could be highly degraded. To solve this possible problem, we decided to compare other two different solutions:

- Using chunks, for example, query 25 by 25 Products. 5475 milliseconds

- Run all queries together, by querying all ProductsIds at the same time. 2315.3 milliseconds

After comparing them, we decided to run all queries together in this case. We also compare client memory for this case and see no huge difference in memory allocation between strategies. In the end, here we went from N Query executions and SQLConnection usage to just 1.

With this update, we could reduce the query time arround 2 seconds.

Bonus: use an optimized function instead of a complex query

If data is ordered, consider use an optimized function. During this performance tuning process, we noticed a huge gain in performance is we replaced the TOP 1 Order by StartDate desc filter with just a MAX aggregate function.

SELECT

OperationId,

OperationStatus,

ProductId,

OperationStartDate,

OperationEndDate,

OperationDetails

FROM

dbo.{DB.ProductsOperationsTable2} PO2

WHERE

ProductId IN ( @ProductIds )

AND OperationStartDate = (

SELECT

MAX(OperationStartDate)

FROM

dbo.{DB.ProductsOperationsTable2}

WHERE

ProductId = PO2.ProductId

)- Using chunks, for example, query 25 by 25 Products. 1110.1 milliseconds

- Run all queries together, by querying all ProductsIds at the same time. 119.43 milliseconds

MAX function is optimized, if we need to process an operation over a data structure, that is already ordered. The operation can be optimized by directly selecting the first or the last element in the structure, instead of traversing to all the indexes.

Time was reduced to 119 milliseconds for 500 products over 80k product operations.

Conclusions

- If we were to start with a cache, it would never solve the problem, we just will be moving it into another place. The query performance will still have the same problem, and also data is not real-time.

- Is important to use the right index.

- When we are looping multiple queries we should take into account that latency can have a huge impact on general performance.

- When dealing with sorting requirements, having the data “pre-sorted” in the desired order can result in a huge performance boost, specifically if we correctly specify this in the query 🙂.

- Some aggregation functions like MAX have performance optimizations under the hood if they are run against ordered data.